PATS (Pose, Audio, Transcript, Style) Dataset

PATS was collected to study the correlation of co-speech gestures with audio and text signals. The dataset consists of a large and diverse collection of aligned pose, audio, and transcripts. With this dataset, we hope to provide a benchmark that will help develop technologies for virtual agents that generate natural and relevant gestures.

The dataset contains:

- 25 speakers with different styles
  - Includes 10 speakers from Ginosar et al. (CVPR 2019)
  - 15 talk show hosts, 5 lecturers, 3 YouTubers, and 2 televangelists
- ~84,000 intervals
  - Mean: 10.7 s per interval
  - Standard deviation: 13.5 s per interval
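Statistics like those above can be recomputed for any subset of speakers from the interval boundaries. The sketch below is a minimal example, assuming each interval is available as a hypothetical `(start, end)` pair in seconds (the actual PATS file layout may differ). It reports the population standard deviation; which estimator was used for the figures above is not stated here. As a rough sanity check, ~84,000 intervals at a mean of 10.7 s each come to about 898,800 s, or roughly 250 hours of aligned data.

```python
import statistics
from typing import Iterable, Tuple


def interval_stats(intervals: Iterable[Tuple[float, float]]) -> Tuple[int, float, float]:
    """Return (count, mean length, population std of length), in seconds.

    `intervals` is a hypothetical list of (start, end) times in seconds.
    """
    lengths = [end - start for start, end in intervals]
    if not lengths:
        raise ValueError("no intervals given")
    return len(lengths), statistics.fmean(lengths), statistics.pstdev(lengths)


def approx_total_hours(count: int, mean_seconds: float) -> float:
    """Estimate total duration in hours as count * mean interval length."""
    return count * mean_seconds / 3600.0


# Toy intervals, not real PATS data: lengths are 4 s, 6 s, and 8 s.
toy = [(0.0, 4.0), (10.0, 16.0), (20.0, 28.0)]
n, mean, std = interval_stats(toy)
print(n, mean, round(std, 3))  # 3 6.0 1.633
print(round(approx_total_hours(84_000, 10.7), 1))  # 249.7
```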

The dataset is available for download from the PATS Dataset Website.

The three modalities in PATS (pose, audio, and transcriptions), available across many speaker styles, present a unique opportunity for the following tasks:

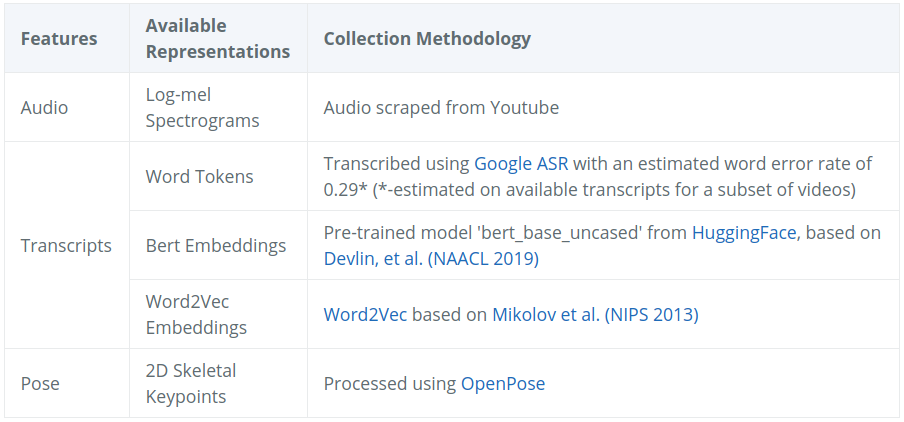

The following features are available:

The speakers in PATS show diverse lexical content in their transcripts as well as diverse gestures. The following figures can help you navigate the speakers in the dataset if you want to work with specific speakers of a particular gestural and/or lexical diversity.

Fig. 1 shows the speakers clustered hierarchically based on the content of their transcripts, and Fig. 2 shows each speaker's position on a plot of lexical diversity versus spatial extent.
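The features and linkage behind Fig. 1 are not specified here. As an illustration only, the sketch below assumes bag-of-words vectors per speaker, cosine distance, and average linkage, implemented with the standard library; the speaker names and transcripts are toy placeholders, and `average_linkage` is a hypothetical helper, not part of any PATS tooling.

```python
import math
from collections import Counter
from itertools import combinations
from typing import Dict, List, Tuple

Cluster = Tuple[str, ...]


def bag_of_words(text: str) -> Counter:
    """Lower-cased word counts for one speaker's transcript."""
    return Counter(text.lower().split())


def cosine_distance(a: Counter, b: Counter) -> float:
    """1 - cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return 1.0 - dot / norm if norm else 1.0


def average_linkage(transcripts: Dict[str, str]) -> List[Tuple[Cluster, Cluster, float]]:
    """Agglomerative clustering; returns merges as (cluster_a, cluster_b, distance)."""
    names = list(transcripts)
    vecs = {name: bag_of_words(text) for name, text in transcripts.items()}
    # Distances between individual speakers, computed once.
    dist = {frozenset(pair): cosine_distance(vecs[pair[0]], vecs[pair[1]])
            for pair in combinations(names, 2)}
    clusters: List[Cluster] = [(name,) for name in names]
    merges = []
    while len(clusters) > 1:
        # Average linkage: mean pairwise distance between members of two clusters.
        best = None
        for a, b in combinations(clusters, 2):
            d = sum(dist[frozenset((x, y))] for x in a for y in b) / (len(a) * len(b))
            if best is None or d < best[2]:
                best = (a, b, d)
        a, b, d = best
        clusters.remove(a)
        clusters.remove(b)
        clusters.append(a + b)
        merges.append((a, b, d))
    return merges


# Toy transcripts, not real PATS data.
toy = {
    "host_a": "tonight we have a great show tonight",
    "host_b": "welcome to the show tonight folks",
    "lecturer": "today we derive the gradient of the loss",
}
for a, b, d in average_linkage(toy):
    print(a, "+", b, round(d, 3))
# The two hosts share "show" and "tonight", so they merge first.
```

For real use, SciPy's `scipy.cluster.hierarchy.linkage` with `method="average"` and `metric="cosine"` on a document-term matrix does the same job more efficiently; the pure-Python loop above is quadratic per merge but fine for 25 speakers.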

As the clusters in Fig. 1 show, speakers in the same domain (e.g., TV show hosts) use similar language. Fig. 2 shows that TV show hosts are generally more expressive with both their hands and their words, while televangelists are less so. Speakers in the top-right corner of Fig. 2 are more challenging to model for gesture generation, as they have greater diversity in both vocabulary and gestures.
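How lexical diversity and spatial extent are measured for Fig. 2 is not defined here. The sketch below assumes two simple stand-ins: type-token ratio (unique words divided by total words) for lexical diversity, and the mean per-frame bounding-box area of a speaker's 2D keypoints for spatial extent. It then flags speakers strictly above the median on both axes, i.e. the top-right region of a plot like Fig. 2. All function names and the input format are hypothetical.

```python
import statistics
from typing import Dict, List, Sequence, Tuple

Frame = Sequence[Tuple[float, float]]  # hypothetical: (x, y) keypoints for one frame


def type_token_ratio(transcript: str) -> float:
    """Unique words / total words; a simple, length-sensitive diversity proxy."""
    words = transcript.lower().split()
    return len(set(words)) / len(words) if words else 0.0


def spatial_extent(frames: List[Frame]) -> float:
    """Mean bounding-box area of the keypoints across (non-empty) frames."""
    areas = []
    for frame in frames:
        xs = [x for x, _ in frame]
        ys = [y for _, y in frame]
        areas.append((max(xs) - min(xs)) * (max(ys) - min(ys)))
    return statistics.fmean(areas)


def top_right(scores: Dict[str, Tuple[float, float]]) -> List[str]:
    """Speakers strictly above the median on both (diversity, extent) axes."""
    med_div = statistics.median(d for d, _ in scores.values())
    med_ext = statistics.median(e for _, e in scores.values())
    return sorted(s for s, (d, e) in scores.items() if d > med_div and e > med_ext)


# Toy speakers, not real PATS data: (transcript, list of frames).
toy = {
    "speaker_a": ("so so so so", [[(0.0, 0.0), (1.0, 1.0)]]),
    "speaker_b": ("every word here differs", [[(0.0, 0.0), (4.0, 3.0)]]),
    "speaker_c": ("yes yes no", [[(0.0, 0.0), (2.0, 2.0)]]),
}
scores = {name: (type_token_ratio(t), spatial_extent(f)) for name, (t, f) in toy.items()}
print(top_right(scores))  # ['speaker_b']
```

Because type-token ratio drops as transcripts get longer, comparing speakers fairly would usually require equal-length word samples; the median split is likewise just one simple way to define "top right".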