MultiSense Report

The MultiSense report is a line of work that aims to bring multimodal analyses of clinical dialogues to clinicians for use in daily evaluations. Through its automated analyses, the MultiComp Lab has established behavior markers for gaze aversion, language, vocal features, and facial expression; the MultiSense report brings that information together in a human-interpretable form for clinical use.

The report is broken up into several sections.

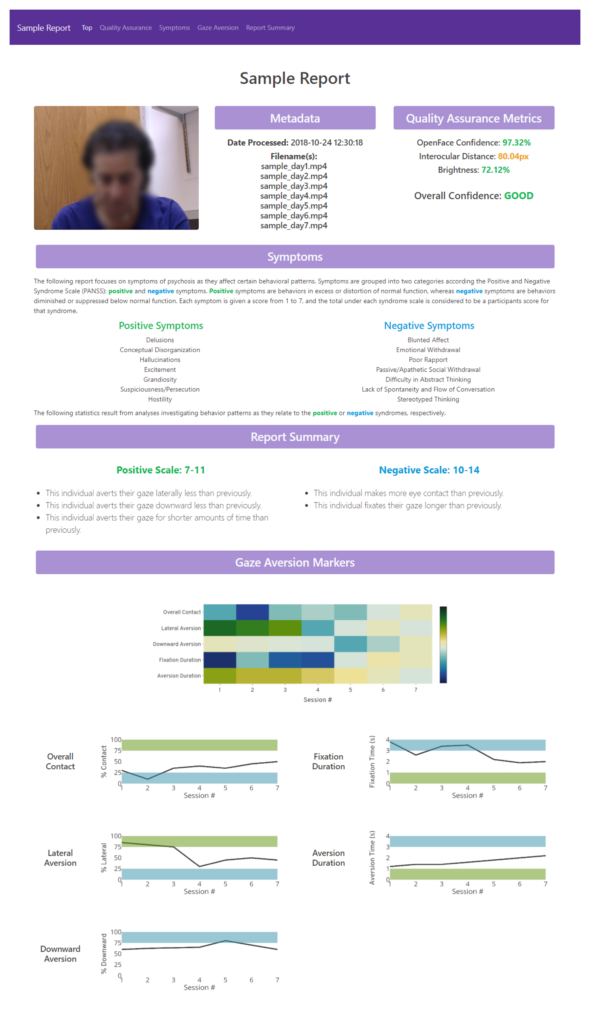

- A screenshot and session metadata aid in record-keeping.

- Quality assurance metrics give the user a clear picture of how confident the software is in its analyses. This is a rough indicator of how trustworthy the analysis may be, based on the quality of this particular set of recordings.

- The report includes a brief description of the symptoms examined within these analyses, explaining how they are classified and introducing a color key for the different scales. Throughout the report, blue values indicate negative symptoms, and green values indicate positive symptoms.

- The report includes a summary metric that indicates the system’s overall prediction of the positive and negative symptom severity scores. The estimate is produced by a neural network applied to the behavior markers displayed earlier in the report.

- Each prediction is supported by a series of interpreted observations drawn from the previously reported behavior markers. These observations point out significant behavior changes that contribute to the overall predicted symptom severity score.

- The report includes a series of behavior marker modules (in this case, only one, for gaze aversion). Each module contains a set of analyses and statistics about a certain type of behavior expressed by the individual in the sessions.
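
To make the layout of the sections above concrete, the report can be pictured as a small data structure. The following is only an illustrative sketch, not the MultiSense implementation: every class, field, and method name here (`MultiSenseReportSketch`, `BehaviorMarkerModule`, `SeveritySummary`, `colored_scores`) is hypothetical, and the only detail taken from the description is the color key (blue for negative symptoms, green for positive symptoms).

```python
from dataclasses import dataclass, field
from enum import Enum


class SymptomClass(Enum):
    NEGATIVE = "negative"
    POSITIVE = "positive"


# Color key as described in the report: blue = negative, green = positive.
COLOR_KEY = {SymptomClass.NEGATIVE: "blue", SymptomClass.POSITIVE: "green"}


@dataclass
class BehaviorMarkerModule:
    """One module per behavior type, e.g. gaze aversion (hypothetical layout)."""
    behavior: str
    statistics: dict[str, float] = field(default_factory=dict)


@dataclass
class SeveritySummary:
    """Predicted severity per symptom class, plus the interpreted observations."""
    scores: dict[SymptomClass, float]
    observations: list[str] = field(default_factory=list)


@dataclass
class MultiSenseReportSketch:
    """Hypothetical container mirroring the report sections listed above."""
    metadata: dict[str, str]
    screenshot_path: str
    quality_metrics: dict[str, float]
    modules: list[BehaviorMarkerModule]
    summary: SeveritySummary

    def colored_scores(self) -> dict[str, float]:
        # Map each predicted score to the display color of its symptom class.
        return {COLOR_KEY[cls]: score for cls, score in self.summary.scores.items()}
```

Grouping the severity scores with their observations in one object reflects the description that each prediction is accompanied by the observations that explain it; the actual report may organize this differently.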