OpenFace: An Open Source Facial Behavior Analysis Toolkit

T. Baltrušaitis, P. Robinson and L.-P. Morency, OpenFace: An Open Source Facial Behavior Analysis Toolkit, In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), 2016

Publications

As an important channel of nonverbal communication, the face reveals a wealth of information about an individual’s affective and cognitive state (e.g., emotions, intentions, and engagement). Automated analysis of facial behavior therefore has the potential to benefit fields ranging from human-computer interaction and consumer electronics to science and healthcare.

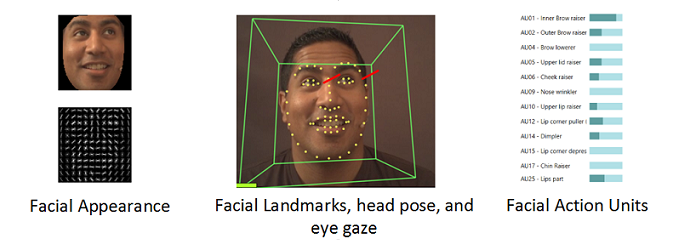

Our work explores many aspects of facial behavior, including head pose, head motion, facial expression, and eye gaze. For example, automatic detection and analysis of facial Action Units is an essential building block in nonverbal behavior and emotion recognition systems. In addition to Action Units, head pose and head gestures play a significant role in how emotions and social signals are both expressed and perceived. Finally, gaze direction is important when evaluating attentiveness, social skills, and mental health, as well as the intensity of emotions. We develop methods to analyze each of these modalities, with a particular focus on challenging real-world environments.