Grounded Language Learning

One of the long-standing goals of artificial intelligence research is enabling humans to communicate with machines using natural language interfaces. The fundamental problem of achieving this is grounded language learning. Grounded language learning is the task of learning the meaning of natural language units (e.g., utterances, phrases, or words) by leveraging the sensory data (e.g., an image). Grounded language learning is a challenging task from a computational perspective due to the inherent ambiguity in natural language and the imperfect sensory data.

We study grounded language learning in the context of learning an interpretable model for referring expressions, i.e., localizing a visual object described by a natural language expression. We propose GroundNet, a dynamic neural architecture for localizing objects mentioned in a referring expression for an image. Our model takes advantage of natural language compositionality to improve interpretability but can maintain high predictive accuracy. Critically, our approach relies on a syntactic analysis of the input referring expression to shape the computation graph. We find that this form of inductive bias helpfully constrains the learned model’s interpretation, but proves not to be overly restrictive. An important intermediate step for grounding referring expressions is the localization of supporting object mentions. Our experiments on the GoogleRef dataset show that GroundNet successfully identifies intermediate supporting objects while maintaining comparable performance to state-of-the-art approaches.

Publications

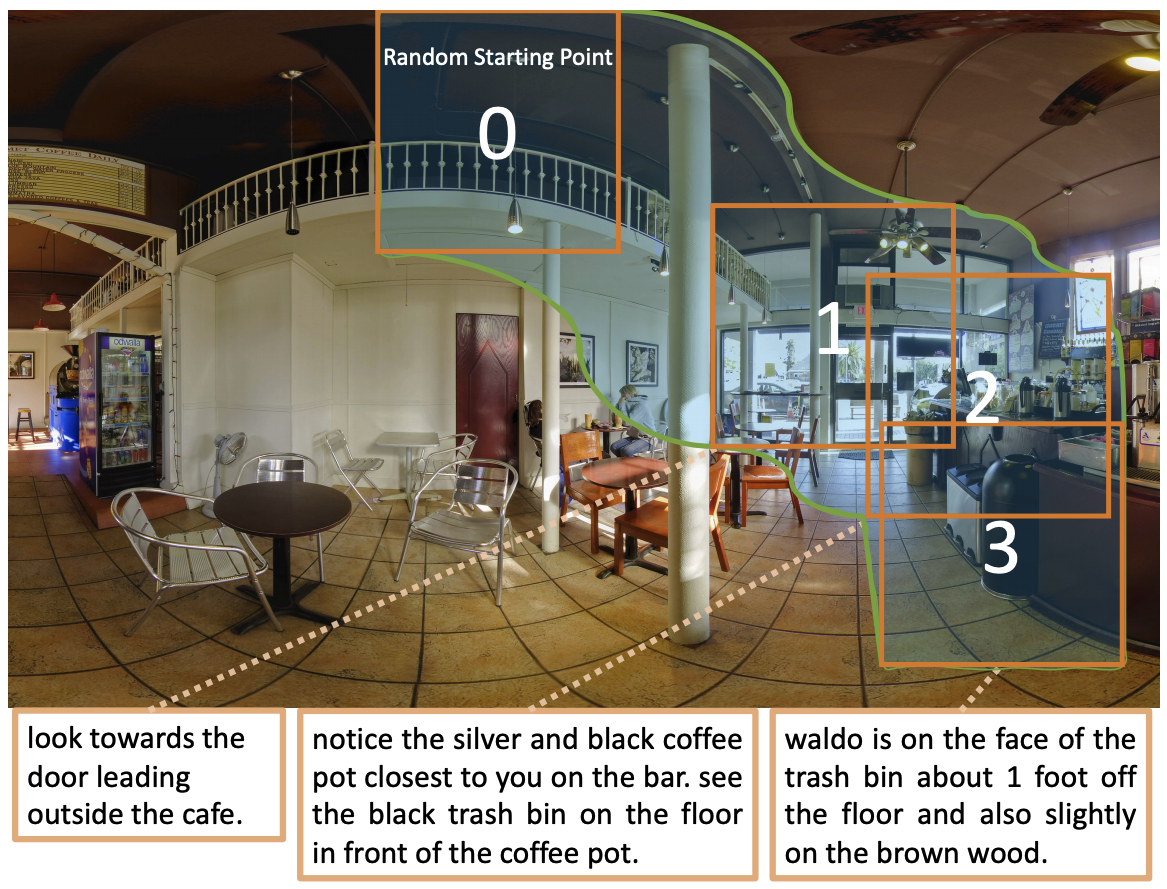

V. Cirik, T. Berg-Kirkpatrick, L.-P. Morency. Refer360°: A Referring Expression Recognition Dataset in 360° Images, ACL 2020

Demo & Code VideosD. Fried*, R. Hu*, V. Cirik*, A. Rohrbach, J. Andreas, L.-P. Morency, T. Berg-Kirkpatrick, K. Saenko, D. Klein, T. Darrell. Speaker-Follower Models for Vision-and-Language Navigation. NeurIPS 2018

Demo & CodeV.Cirik, L.-P.Morency, T. Berg-Kirkpatrick. Visual Referring Expression Recognition: What Do Systems Actually Learn? NAACL 2018

Demo & CodeV. Cirik, L.-P. Morency. Using Syntax to Ground Referring Expressions in Natural Images. AAAI 2018

Demo & CodeYu, S. Zhang and L.-P. Morency, Unsupervised Text Recap Extraction for TV Series, In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), 2016

V. Cirik, L.-P. Morency and E. Hovy, Chess Q&A : Question Answering on Chess Games, NIPS Workshop on Reasoning, Attention, Memory (RAM), 2015

Demo & Code