Mission

MultiComp Lab’s mission is to build the algorithms and computational foundations needed to understand the interdependence among human verbal, visual, and vocal behaviors expressed during social communicative interactions.

Artificial Social Intelligence

- Visual, vocal and verbal behaviors

- Dyadic and group interactions

- Learning and children's behaviors

We build the computational foundations that give computers the ability to analyze, recognize, and predict subtle human communicative dynamics (behavioral, multimodal, interpersonal, and societal) during social interactions.

Multimodal Machine Learning

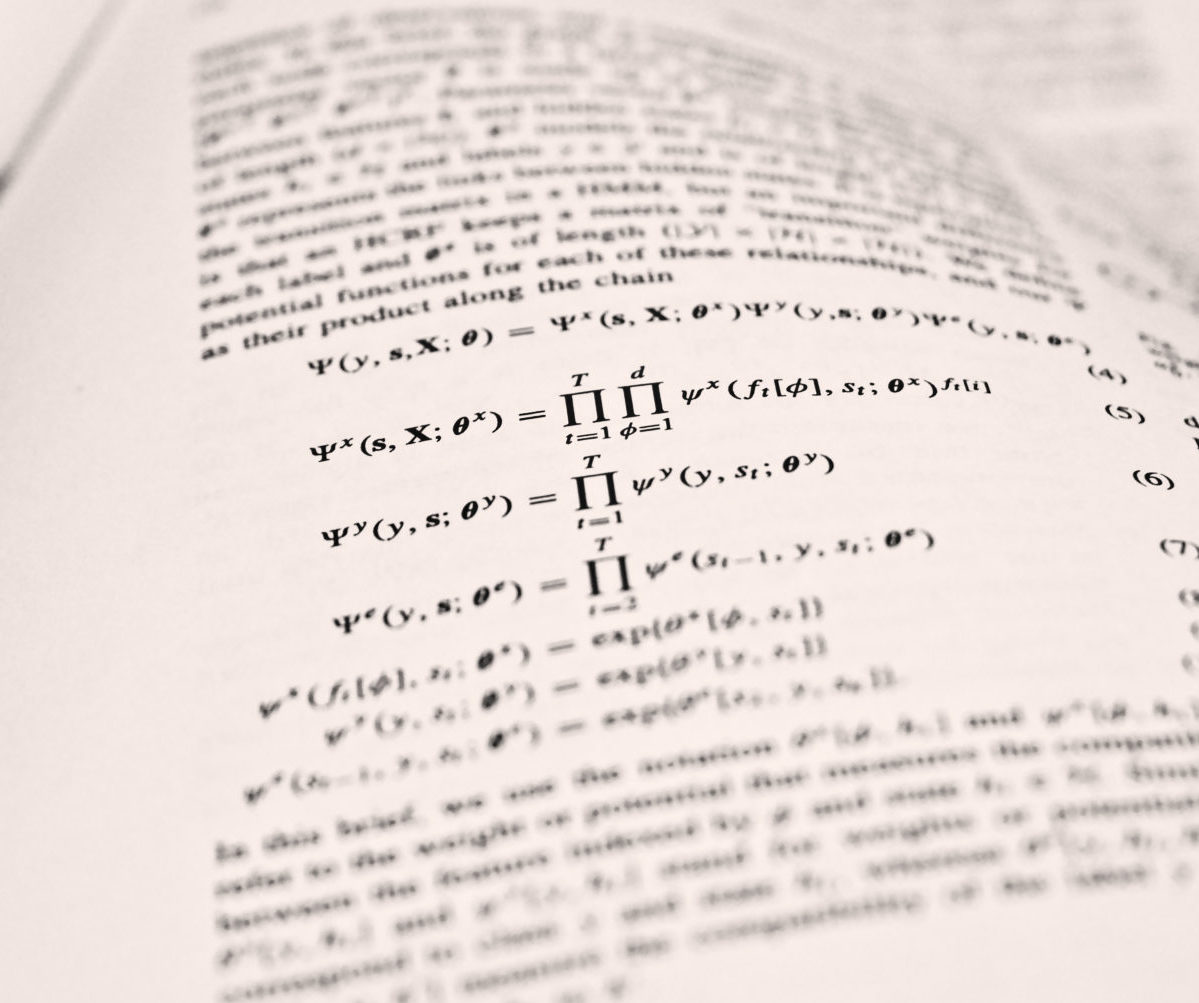

- Multimodal representation learning

- Temporal sequential models

- Deep neural architectures

We create algorithms, probabilistic models, and neural architectures to better understand the interdependence and complementarity present in heterogeneous data originating from multiple modalities (language, speech, and vision).

Health Behavior Informatics

- Behavior markers of mental health

- Depression, PTSD, psychosis, suicide

- Telemedicine and clinical interviews

We create decision-support technologies that assist clinicians and healthcare providers in the diagnosis and treatment of mental illness by automatically identifying behavior markers from patients’ verbal and nonverbal behaviors.

About

The Multimodal Communication and Machine Learning Laboratory (MultiComp Lab) is headed by Dr. Louis-Philippe Morency at the Language Technologies Institute of Carnegie Mellon University. MultiComp Lab exemplifies the strength of multidisciplinary research, integrating expertise from machine learning, computer vision, speech and natural language processing, affective computing, and social psychology. Our research methodology rests on two fundamental pillars: (1) a deep understanding of the mathematical models and neural architectures involved in multimodal perception, modeling, and generation, and (2) comprehensive knowledge of the emotional, cognitive, and social components of dyadic and small-group interactions. Our algorithms and computational models enable technologies for real-world applications, such as helping healthcare practitioners by automatically detecting behavior markers of mental health, or enabling effective collaboration among remote-learning students by moderating group dynamics.

Latest News

March 2018

Call for papers: First Workshop on Computational Modeling of Human Multimodal Language at ACL

February 2018

Dr. Morency receives Finmeccanica Career Development Professorship in Computer Science

January 2018

Commencing two reading groups for Spring 2018